2009-07-19

22:35

Several people store geographical points within CouchDB and would like to make a bounding box query on them. This isn't possible with plain CouchDB _views, but there's light at the end of the tunnel. One solution will be GeoCouch (which can do a lot more than simple bounding box queries) once there's a new release. The other one is already there: you can use the list/show API (warning: the current wiki page, as of 2009-07-19, applies to CouchDB 0.9; I use the new 0.10 API).

You can either add a _list function as described in the documentation or use my futon-list branch, which includes an interface for easier _list function creation and editing.
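A _list function is stored in a design document alongside the view it formats, with each function kept as a source string. The sketch below is a hypothetical helper (not part of CouchDB or of futon-list) that assembles such a document, reusing the names designdoc, viewname and bbox from the example URL at the end of this post, and uploads it with an HTTP PUT. It assumes a runtime with a global fetch, such as Node.js 18+.

```javascript
// Hypothetical helper: builds a design document holding one view and one _list function.
// Function bodies are stored as source strings, which is how CouchDB keeps them.
function makeDesignDoc(mapSource, listSource) {
  return {
    _id: '_design/designdoc',
    language: 'javascript',
    views: {
      viewname: { map: mapSource }
    },
    lists: {
      bbox: listSource
    }
  };
}

// Hypothetical upload helper (assumes a global fetch, e.g. Node.js 18+).
// Updating an existing design document additionally requires its current _rev.
async function putDesignDoc(dbUrl, designDoc) {
  const res = await fetch(dbUrl + '/' + designDoc._id, {
    method: 'PUT',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(designDoc)
  });
  if (!res.ok) {
    throw new Error('PUT failed with HTTP ' + res.status);
  }
  return res.json();
}
```

For example, putDesignDoc('http://localhost:5984/geodata', makeDesignDoc(mapSource, listSource)) would create the design document in the geodata database.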

Your data

The _list function needs to match your data, so I expect documents with a field named location that contains an array with the coordinates (longitude first, then latitude). Here's a simple example document:

```json
{
  "_id": "00001aef7b72e90b991975ef2a7e1fa7",
  "_rev": "1-4063357886",
  "name": "Augsburg",
  "location": [
    10.898333,
    48.371667
  ],
  "some extra data": "Zirbelnuss"
}
```
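The post doesn't show the view behind the _list function. Because the _list functions below read row.value.location, one plausible map function (an assumption, not necessarily the author's) emits the whole document as the row value and skips documents without a usable location. The variable name mapLocations is hypothetical; in a design document the function would be stored as anonymous source.

```javascript
// Assumed map function for the view "viewname" (not shown in the original post).
// It is assigned to a variable here only so it can be called outside CouchDB.
// CouchDB provides emit() while the view is being built.
var mapLocations = function(doc) {
  var loc = doc.location;
  // Only index documents whose location is a [longitude, latitude] pair of numbers
  if (loc && typeof loc[0] === 'number' && typeof loc[1] === 'number') {
    // The whole document becomes row.value, so the _list function can read row.value.location
    emit(doc._id, doc);
  }
};
```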

The _list function

We aim to create a _list function that returns the same response as a normal _view would, but filtered with a bounding box. Let's start with a _list function that returns the same results as a plain _view (no bounding box filtering yet). Only the whitespace in the output differs slightly.

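```javascript
function(head, req) {
  var row, sep = '\n';
  // Send the same Content-Type as CouchDB would; guard against a missing Accept header
  var accept = req.headers.Accept || '';

  if (accept.indexOf('application/json') != -1)
    start({"headers": {"Content-Type": "application/json"}});
  else
    start({"headers": {"Content-Type": "text/plain"}});

  // Open the JSON object with the same metadata a _view response carries
  send('{"total_rows":' + head.total_rows +
       ',"offset":' + head.offset + ',"rows":[');

  // Stream every row unchanged, separated by commas
  while (row = getRow()) {
    send(sep + toJSON(row));
    sep = ',\n';
  }

  // The returned string is sent as the last chunk and closes the JSON
  return "\n]}";
};
```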

The _list API allows you to add arbitrary query parameters to the URL. In our case that will be bbox=west,south,east,north (adapted from the OpenSearch Geo Extension). Parsing the bounding box is really easy: the query parameters of the request are stored in the property req.query as key/value pairs. Get the bounding box, split it into separate values and compare them with the location of every row.
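The comparison step can be pulled out into two small helpers. This is a sketch with hypothetical names (parseBbox, inBbox); it converts the split strings to numbers explicitly instead of relying on JavaScript's implicit coercion, and it rejects anything that isn't four numbers. Like the post, it uses strict inequalities, so points lying exactly on an edge are excluded.

```javascript
// Hypothetical helper: turns "west,south,east,north" into an array of four numbers.
// Returns null if the parameter is missing or malformed.
function parseBbox(param) {
  if (!param) return null;
  var parts = param.split(',');
  if (parts.length !== 4) return null;
  var bbox = [];
  for (var i = 0; i < 4; i++) {
    var n = parseFloat(parts[i]);
    if (isNaN(n)) return null;
    bbox.push(n);
  }
  return bbox;
}

// Hypothetical predicate: true if location = [longitude, latitude] lies strictly inside bbox.
// bbox[0] = west, bbox[1] = south, bbox[2] = east, bbox[3] = north.
function inBbox(location, bbox) {
  return location[0] > bbox[0] && location[0] < bbox[2] &&
         location[1] > bbox[1] && location[1] < bbox[3];
}
```

To use them in CouchDB, the helpers would have to be declared inside the _list function body, since the design document stores only that function's source.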

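```javascript
// bbox=west,south,east,north; convert the strings to numbers explicitly
var row, location, bbox = req.query.bbox.split(',').map(parseFloat);

while (row = getRow()) {
  location = row.value.location;
  // location is [longitude, latitude]
  if (location[0] > bbox[0] && location[0] < bbox[2] &&
      location[1] > bbox[1] && location[1] < bbox[3]) {
    send(sep + toJSON(row));
    sep = ',\n';
  }
}
```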

Finally, we make sure that no error is thrown when the bbox query parameter is omitted. Here's the final result:

```javascript
function(head, req) {
  var row, bbox, location, sep = '\n';
  // Send the same Content-Type as CouchDB would; guard against a missing Accept header
  var accept = req.headers.Accept || '';

  if (accept.indexOf('application/json') != -1)
    start({"headers": {"Content-Type": "application/json"}});
  else
    start({"headers": {"Content-Type": "text/plain"}});

  // bbox=west,south,east,north; convert the strings to numbers explicitly
  if (req.query.bbox)
    bbox = req.query.bbox.split(',').map(parseFloat);

  send('{"total_rows":' + head.total_rows +
       ',"offset":' + head.offset + ',"rows":[');

  while (row = getRow()) {
    location = row.value.location;
    // Without a bbox parameter every row passes, just like a plain _view
    if (!bbox || (location[0] > bbox[0] && location[0] < bbox[2] &&
                  location[1] > bbox[1] && location[1] < bbox[3])) {
      send(sep + toJSON(row));
      sep = ',\n';
    }
  }

  return "\n]}";
};
```
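To check the function's output without a running CouchDB, you can stub the functions the _list API provides (start, send, getRow, toJSON). The harness below is a hypothetical test rig, not part of CouchDB; it assumes toJSON behaves like JSON.stringify, which is close enough for plain rows. It contains a copy of the final function (with a guard for a missing Accept header and explicit number conversion) and runs it over two sample rows, once without and once with a bbox parameter.

```javascript
// Hypothetical local test rig for a CouchDB _list function (not part of CouchDB).
// It stubs the globals the _list API provides, runs the list function once and
// collects the response headers and body.
function runList(listFn, head, rows, req) {
  var chunks = [], headers = null, i = 0;
  globalThis.start = function(resp) { headers = resp.headers; };
  globalThis.send = function(chunk) { chunks.push(chunk); };
  // getRow() hands out one row per call and null once the rows are exhausted
  globalThis.getRow = function() { return i < rows.length ? rows[i++] : null; };
  // Assumption: CouchDB's toJSON() serializes a row like JSON.stringify()
  globalThis.toJSON = function(obj) { return JSON.stringify(obj); };
  // The list function's return value is sent as the last chunk
  var tail = listFn(head, req);
  if (tail) chunks.push(tail);
  return { headers: headers, body: chunks.join('') };
}

// Copy of the final bbox _list function, assigned to a variable so it can be called
var bboxList = function(head, req) {
  var row, bbox, location, sep = '\n';
  var accept = req.headers.Accept || '';
  if (accept.indexOf('application/json') != -1)
    start({"headers": {"Content-Type": "application/json"}});
  else
    start({"headers": {"Content-Type": "text/plain"}});
  if (req.query.bbox)
    bbox = req.query.bbox.split(',').map(parseFloat);
  send('{"total_rows":' + head.total_rows +
       ',"offset":' + head.offset + ',"rows":[');
  while (row = getRow()) {
    location = row.value.location;
    if (!bbox || (location[0] > bbox[0] && location[0] < bbox[2] &&
                  location[1] > bbox[1] && location[1] < bbox[3])) {
      send(sep + toJSON(row));
      sep = ',\n';
    }
  }
  return "\n]}";
};

// Two made-up sample rows shaped like the output of a view that emits whole documents
var sampleRows = [
  { id: 'a', key: 'a', value: { name: 'Augsburg', location: [10.898333, 48.371667] } },
  { id: 'b', key: 'b', value: { name: 'Example point', location: [151.2, -33.9] } }
];
```

Calling runList(bboxList, { total_rows: 2, offset: 0 }, sampleRows, { headers: {}, query: { bbox: '10,0,120,90' } }) should yield a text/plain body containing only the Augsburg row.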

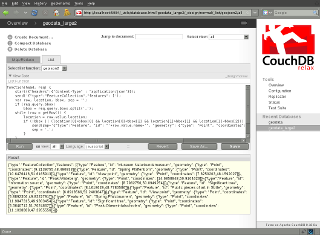

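An example of how to access your _list function:

```
http://localhost:5984/geodata/_design/designdoc/_list/bbox/viewname?bbox=10,0,120,90&limit=10000
```

Here geodata is the database, designdoc the design document, bbox the name of the _list function and viewname the view it formats. Regular view parameters such as limit are applied to the view, so limit=10000 caps the number of rows the _list function examines, not the number of rows it returns.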
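If you build such URLs from code, URLSearchParams takes care of the encoding. A minimal sketch with hypothetical function names, assuming a runtime that provides URLSearchParams and fetch (a browser or Node.js 18+). URLSearchParams percent-encodes the commas as %2C, which CouchDB decodes again before the value reaches req.query.bbox.

```javascript
// Hypothetical helper: builds the _list URL for a bounding box query.
// bbox is [west, south, east, north]; extra holds further view parameters such as limit.
function bboxListUrl(dbUrl, bbox, extra) {
  var params = new URLSearchParams({ bbox: bbox.join(',') });
  for (var name in extra) {
    params.append(name, String(extra[name]));
  }
  return dbUrl + '/_design/designdoc/_list/bbox/viewname?' + params.toString();
}

// Hypothetical client call (assumes a global fetch).
async function fetchPointsInBbox(dbUrl, bbox, extra) {
  var res = await fetch(bboxListUrl(dbUrl, bbox, extra), {
    headers: { Accept: 'application/json' }
  });
  if (!res.ok) throw new Error('HTTP ' + res.status);
  // The _list function mirrors a _view response: { total_rows, offset, rows }
  return (await res.json()).rows;
}
```

Requesting application/json explicitly makes the _list function answer with the same Content-Type a _view would.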

Now you should be able to filter any of your point clouds with a bounding box. Because the _list function examines every row of the view, the cost grows linearly with the number of points, so performance should be acceptable for a moderate number of them. A typical use case would be displaying a few points on a map, where you don't want to see zillions of them anyway.

Stay tuned for a follow-up posting about displaying points with OpenLayers.

Categories: en, CouchDB, JavaScript, geo